#mongodb configuration

#youtube#video#codeonedigest#microservices#microservice#docker#springboot#spring boot#mongodb configuration#mongodb docker install#spring boot mongodb#mongodb compass#mongodb java#mongodb#dockercontainers#docker image#docker container#docker tutorial#dockerfile#spring boot microservices#java microservice#microservice tutorial

0 notes

Graylog Docker Compose Setup: An Open Source Syslog Server for Home Labs

Graylog Docker Compose Install: Open Source Syslog Server for Home Labs #homelab #GraylogInstallationGuide #DockerComposeOnUbuntu #GraylogRESTAPI #ElasticsearchAndGraylog #MongoDBWithGraylog #DockerComposeYmlConfiguration #GraylogDockerImage #Graylogdata

Graylog is a great open-source log management platform for both production and home lab environments. With Docker Compose, you can quickly launch Graylog as a Syslog server and create and configure all the containers it needs, such as OpenSearch and MongoDB. Let's look at this process. Table of contents: What is Graylog? Advantages of…
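Once the stack is running, applications send their logs to a Graylog input. The sketch below uses Python's standard `logging.handlers.SysLogHandler` to forward application logs to Graylog. It assumes you have created a "Syslog UDP" input listening on port 1514 on the Docker host; the port is our assumption, chosen because ports below 1024 need root inside the container. `make_graylog_logger` and the `homelab-app` tag are hypothetical names.

```python
import logging
import logging.handlers
import socket

# Assumptions: Graylog runs on this host and has a "Syslog UDP" input on 1514.
GRAYLOG_HOST = "localhost"
GRAYLOG_SYSLOG_PORT = 1514


def make_graylog_logger(host: str = GRAYLOG_HOST,
                        port: int = GRAYLOG_SYSLOG_PORT) -> logging.Logger:
    """Return a logger whose records are sent as syslog datagrams to Graylog."""
    logger = logging.getLogger(f"graylog-demo:{host}:{port}")
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # avoid duplicate handlers on repeated calls
        handler = logging.handlers.SysLogHandler(
            address=(host, port), socktype=socket.SOCK_DGRAM
        )
        # SysLogHandler prepends the <PRI> header; this formats the message body.
        handler.setFormatter(
            logging.Formatter("homelab-app: %(levelname)s %(message)s")
        )
        logger.addHandler(handler)
    return logger
```

Calling `make_graylog_logger().info("backup job finished")` should make the message searchable in the Graylog web interface. UDP is fire-and-forget, so messages sent while Graylog is down are lost. A TCP input (`socktype=socket.SOCK_STREAM`) trades that risk for a connection the app must keep open.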

#Docker Compose on Ubuntu#docker-compose.yml configuration#Elasticsearch and Graylog#Graylog data persistence#Graylog Docker image#Graylog installation guide#Graylog REST API#Graylog web interface setup#log management with Graylog#MongoDB with Graylog

0 notes

My undergrad operating systems professor was Emin Gün Sirer. Some anecdotes about this guy:

He explained on the first day of class that his assignments were all designed to take at most one week to complete, and so he gave you two weeks, with the understanding that there was absolutely not a chance in hell of any kind of extension beyond the due date, not even if you and your entire extended family were all dying.

He would go on Twitter (and this was in ~2011, long before Twitter was the hellscape it is now) and get in fights with people about Bitcoin and MongoDB, both of which he hated.

One time I submitted an assignment right at the deadline, but to my horror I had forgotten to make some of the parameters configurable on the CLI as was required, and instead they were hardcoded into the program. I asked if I could resubmit with that fixed, and he said I could... if I wrote an essay on the importance of configurability and read it out loud in front of our ~40 person lecture. Which I did.

Loved him.

53 notes

Binance clone script — Overview by BlockchainX

A Binance Clone Script is a pre-built, customizable software solution that replicates Binance's features. Connect with BlockchainX to learn more.

What is a Binance clone script?

A Binance clone script is a ready-made solution that replicates the core functions of Binance, the widely acclaimed cryptocurrency exchange. It lets companies launch their own Binance-like platforms that closely match the functionality and user interface of the world-famous exchange. The clone script can be customized flexibly and comes with built-in functionality such as spot trading, futures trading configurations, and highly secure wallet systems.

Because these components are already built, the script reduces development cost and time. For the many young entrepreneurs launching a startup, the capital saved can go toward expanding or growing the business.

Its feature set is designed to meet the future demands of the field. Operators can offer customers a safe trading experience, and buyers of the script get access to the features behind Binance's success.

How does the Binance clone script work?

The Binance clone script provides a ready-made platform that replicates Binance's core features, such as user registration, wallet management, and trading. Users can create accounts, deposit or withdraw cryptocurrency, and trade digital assets easily and safely through a single interface. The platform supports various trading methods such as market orders, limit orders, and futures trading. It has built-in security features like two-factor authentication (2FA) to protect user funds. Admin dashboards allow platform owners to manage users, manage tasks, and set up billing. The script can be tailored to your brand and connected to external liquidity sources to make trading more efficient. In short, the Binance clone script provides everything needed to create a fully functional crypto exchange.
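Market and limit orders only work if a matching engine pairs buyers with sellers. The toy order book below illustrates this with price-time priority: the best price fills first, and among equal prices the earliest order fills first. It is a hypothetical sketch, not BlockchainX's engine. `OrderBook`, `submit`, and the `(resting_order_id, price, qty)` fill format are invented for this example. A production engine would add persistence, fees, decimal arithmetic, and self-trade prevention.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(order=True)
class _Resting:
    # Only sort_key is compared: (price priority, arrival sequence) = price-time priority.
    sort_key: Tuple[float, int]
    order_id: str = field(compare=False)
    price: float = field(compare=False)
    qty: int = field(compare=False)


class OrderBook:
    """Toy single-symbol limit order book (hypothetical illustration)."""

    def __init__(self) -> None:
        self.bids: List[_Resting] = []  # max-heap on price via negated key
        self.asks: List[_Resting] = []  # min-heap on price
        self._seq = itertools.count()

    def submit(self, order_id: str, side: str, qty: int,
               price: Optional[float] = None) -> List[Tuple[str, float, int]]:
        """Match a buy/sell order; price=None means a market order.

        Returns fills as (resting_order_id, trade_price, qty)."""
        if side not in ("buy", "sell"):
            raise ValueError("side must be 'buy' or 'sell'")
        own, opposite = (self.bids, self.asks) if side == "buy" else (self.asks, self.bids)
        fills = []
        while qty > 0 and opposite:
            best = opposite[0]
            if price is not None and (
                (side == "buy" and best.price > price)
                or (side == "sell" and best.price < price)
            ):
                break  # best opposite price no longer crosses our limit
            traded = min(qty, best.qty)
            fills.append((best.order_id, best.price, traded))  # trade at maker's price
            qty -= traded
            best.qty -= traded
            if best.qty == 0:
                heapq.heappop(opposite)
        # A limit order's unfilled remainder rests on the book;
        # a market order's remainder is simply dropped in this sketch.
        if qty > 0 and price is not None:
            key = (-price if side == "buy" else price, next(self._seq))
            heapq.heappush(own, _Resting(key, order_id, price, qty))
        return fills
```

For example, with asks resting at 100 and 101, a limit buy at 100 only consumes the 100-priced orders. A market buy keeps walking up the book until its quantity is filled or the book is empty.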

Key features of a Binance Clone Script

The key features of a Binance Clone Script are designed to make your cryptocurrency exchange platform secure, user-friendly, and fully functional. Here’s a simple overview of these features:

User-Friendly Interface

Multi-Currency Support

Advanced Trading Engine

Secure Wallet System

KYC/AML Integration

Admin Dashboard

Security Features

Trading Options

These features help ensure that your Binance-like exchange is efficient, secure, and ready for the growing crypto market.

Technology Stack Used by BlockchainX

The technology stack used to develop the Binance clone script combines advanced technologies to make the platform secure, scalable, and high-performance. Here are the key technologies, with brief descriptions:

Blockchain Technology:

Blockchain is the foundation of a cryptocurrency exchange because it ensures safe, decentralized processing of transactions.

It normally runs on Ethereum or BSC (Binance Smart Chain) to execute smart contracts and token transfers.

Programming Languages:

Frontend: React or Angular can be used to build the user interface, giving a responsive and interactive experience across devices.

Backend: Runtimes and frameworks such as Node.js, Python, or Ruby on Rails run the server-side logic and handle how users interact with each module.

Databases:

Relational databases such as MySQL or PostgreSQL are typically used to store user information, transaction records, and other exchange data.

NoSQL databases such as MongoDB might be used for horizontal scalability and high-volume transaction storage.

Smart Contracts:

Smart contracts are deployed to handle automated trading, token generation, and other decentralized functionality.

Blockchain Wallets:

The platform integrates popular wallets such as MetaMask, Trust Wallet, or Ledger for secure cryptocurrency storage and transactions.

Advantages of using a Binance Clone Script

Here are the advantages of using a Binance Clone Script:

Faster Time-to-Market

Cost-Effective

Customizable Features

Liquidity Integration

Multiple Trading Options

So, for anyone entering the cryptocurrency market, a Binance Clone Script is one of the quickest ways to build something that pays off.

How to Get Started with BlockchainX’s Binance Clone Script

Getting started with BlockchainX's Binance Clone Script is straightforward. The first step is to contact the company for an initial consultation about your requirements, the customizations you need, and your goals. Once BlockchainX understands your needs, it provides a detailed proposal and a cost estimate for the project. After both sides agree on the proposal, features, and timelines, BlockchainX begins developing a Binance Clone Script tailored to your brand, user interface, and other requirements.

After the platform is built, it goes through rigorous testing to ensure that everything works correctly, and deployment follows. After deployment, BlockchainX can further customize your user interface and add extensions. BlockchainX also provides ongoing support and maintenance so that your exchange runs smoothly and securely.

Conclusion:

In short, a Binance Clone Script is a resilient way to launch your own cryptocurrency exchange without the budget needed to build the app from scratch. With BlockchainX's expertise, you can customize and scale a powerful Binance-like platform while keeping setups specific to your business. The clone script also includes features such as multi-currency support, high-end security, real-time data, and a smooth user interface that lets your users trade without glitches.

This solution gives you easy access to a ready-made exchange. After all, why partner with an inexperienced crypto exchange developer who struggles to offer anything new?

#binance clone script#binance clone script development#binance clone script development service#blockchain technology#blockchain#cryptocurrency#cryptocurrencies

2 notes

Python FullStack Developer Jobs

Introduction:

A Python full-stack developer is a professional who has expertise in both front-end and back-end development using Python as their primary programming language. This means they are skilled in building web applications from the user interface to the server-side logic and the database. Here’s some information about Python full-stack developer jobs.

Job Responsibilities:

Front-End Development: Python full-stack developers are responsible for creating and maintaining the user interface of a web application. This involves using front-end technologies like HTML, CSS, JavaScript, and various frameworks like React, Angular, or Vue.js.

Back-End Development: They also work on the server side of the application, managing databases, handling HTTP requests, and building the application's logic. Python, along with frameworks like Django, Flask, or FastAPI, is commonly used for back-end development.

Database Management: Full-stack developers often work with databases like PostgreSQL, MySQL, or NoSQL databases like MongoDB to store and retrieve data.

API Development: Creating and maintaining APIs for communication between the front-end and back-end systems is a crucial part of the job. RESTful and GraphQL APIs are commonly used.

Testing and Debugging: Full-stack developers are responsible for testing and debugging their code to ensure the application’s functionality and security.

Version Control: Using version control systems like Git to track changes and collaborate with other developers.

Deployment and DevOps: Deploying web applications on servers, configuring server environments, and implementing continuous integration/continuous deployment (CI/CD) pipelines.

Security: Ensuring the application is secure by implementing best practices and security measures to protect against common vulnerabilities.
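The database, API, and security responsibilities above meet in almost every back end. The sketch below shows a JSON REST endpoint backed by SQLite, using only Python's standard library. The `tasks` resource, `TaskHandler`, and `make_server` are hypothetical names chosen for illustration. Real projects would usually build this with Django, Flask, or FastAPI.

```python
import json
import sqlite3
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical "tasks" service built only on the standard library.
DB = sqlite3.connect(":memory:", check_same_thread=False)
DB.execute("CREATE TABLE tasks (id INTEGER PRIMARY KEY, title TEXT NOT NULL)")


class TaskHandler(BaseHTTPRequestHandler):
    def _send_json(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_GET(self):  # GET /tasks -> list all tasks
        if self.path != "/tasks":
            return self._send_json(404, {"error": "not found"})
        rows = DB.execute("SELECT id, title FROM tasks ORDER BY id").fetchall()
        self._send_json(200, [{"id": i, "title": t} for i, t in rows])

    def do_POST(self):  # POST /tasks {"title": ...} -> create a task
        if self.path != "/tasks":
            return self._send_json(404, {"error": "not found"})
        length = int(self.headers.get("Content-Length", 0))
        try:
            title = str(json.loads(self.rfile.read(length))["title"]).strip()
        except (ValueError, KeyError, TypeError):
            return self._send_json(400, {"error": "expected JSON with a 'title'"})
        if not title:
            return self._send_json(400, {"error": "title must not be empty"})
        # Parameterized query: user input never becomes part of the SQL text.
        cur = DB.execute("INSERT INTO tasks (title) VALUES (?)", (title,))
        DB.commit()
        self._send_json(201, {"id": cur.lastrowid, "title": title})

    def log_message(self, format, *args):  # silence per-request logging
        pass


def make_server(port=8000):
    """Single-threaded server, so the shared SQLite connection is used serially."""
    return HTTPServer(("127.0.0.1", port), TaskHandler)
```

To try it, run `make_server().serve_forever()` and send `curl -X POST -d '{"title": "write docs"}' http://127.0.0.1:8000/tasks`. The input validation and parameterized query are small examples of the security practices listed above.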

Skills and Qualifications:

To excel in a Python full-stack developer role, you should have the following skills and qualifications:

Proficiency in Python programming.

Strong knowledge of front-end technologies (HTML, CSS, JavaScript) and frameworks.

Expertise in back-end development using Python and relevant web frameworks.

Experience with databases and data modeling.

Knowledge of version control systems (e.g., Git).

Familiarity with web servers and deployment.

Understanding of web security and best practices.

Problem-solving and debugging skills.

Collaboration and teamwork.

Continuous learning and staying up to date with the latest technologies and trends.

Job Opportunities:

Python full-stack developers are in demand in various industries, including web development agencies, e-commerce companies, startups, and large enterprises. Job titles you might come across include Full-Stack Developer, Python Developer, Web Developer, or Software Engineer.

The job market for Python full-stack developers is generally favorable, and these professionals can expect competitive salaries, particularly with experience and a strong skill set. Many companies appreciate the versatility of full-stack developers who can work on both the front-end and back-end aspects of their web applications.

To find Python full-stack developer job opportunities, you can check job boards, company career pages, and professional networking sites like LinkedIn. Additionally, you can work with recruitment agencies specializing in tech roles or attend tech job fairs and conferences to network with potential employers.

Python full stack developer jobs offer a range of advantages to those who pursue them. Here are some of the key advantages of working as a Python full stack developer:

Versatility: Python is a versatile programming language, and as a full stack developer, you can work on both front-end and back-end development, as well as other aspects of web development. This versatility allows you to work on a wide range of projects and tasks.

High demand: Python is one of the most popular programming languages, and there is a strong demand for Python full stack developers. This high demand leads to ample job opportunities and competitive salaries.

Job security: With the increasing reliance on web and mobile applications, the demand for full stack developers is expected to remain high. This job security provides a sense of stability and long-term career prospects.

Wide skill set: As a full stack developer, you gain expertise in various technologies and frameworks for both front-end and back-end development, including Django, Flask, JavaScript, HTML, CSS, and more. This wide skill set makes you a valuable asset to any development team.

Collaboration: Full stack developers often work closely with both front-end and back-end teams, fostering collaboration and communication within the development process. This can lead to a more holistic understanding of projects and better teamwork.

Problem-solving: Full stack developers often encounter challenges that require them to think critically and solve complex problems. This aspect of the job can be intellectually stimulating and rewarding.

Learning opportunities: The tech industry is constantly evolving, and full stack developers have the opportunity to continually learn and adapt to new technologies and tools. This can be personally fulfilling for those who enjoy ongoing learning.

Competitive salaries: Python full stack developers are typically well-compensated due to their valuable skills and the high demand for their expertise. Salaries can vary based on experience, location, and the specific organization.

Entrepreneurial opportunities: With the knowledge and skills gained as a full stack developer, you can also consider creating your own web-based projects or startup ventures. Python’s ease of use and strong community support can be particularly beneficial in entrepreneurial endeavors.

Remote work options: Many organizations offer remote work opportunities for full stack developers, allowing for greater flexibility in terms of where you work. This can be especially appealing to those who prefer a remote or freelance lifestyle.

Open-source community: Python has a vibrant and active open-source community, which means you can easily access a wealth of libraries, frameworks, and resources to enhance your development projects.

Career growth: As you gain experience and expertise, you can advance in your career and explore specialized roles or leadership positions within development teams or organizations.

Conclusion:

Python full stack developer jobs offer a combination of technical skills, career stability, and a range of opportunities in the tech industry. If you enjoy working on both front-end and back-end aspects of web development and solving complex problems, this career path can be a rewarding choice.

Thanks for reading; we hope you liked the article. If you want to take our Full Stack Master's course, please attend our live demo sessions or contact us at +918464844555. We provide the best Online Full Stack Developer Course in Hyderabad with an affordable course fee structure.

2 notes

You can learn Node.js easily. Here's all you need:

1. Introduction to Node.js

• JavaScript Runtime for Server-Side Development

• Non-Blocking I/O

2. Setting Up Node.js

• Installing Node.js and NPM

• Package.json Configuration

• Node Version Manager (NVM)

3. Node.js Modules

• CommonJS Modules (require, module.exports)

• ES6 Modules (import, export)

• Built-in Modules (e.g., fs, http, events)

4. Core Concepts

• Event Loop

• Callbacks and Asynchronous Programming

• Streams and Buffers

5. Core Modules

• fs (File System)

• http and https (HTTP Modules)

• events (Event Emitter)

• util (Utilities)

• os (Operating System)

• path (Path Module)

6. NPM (Node Package Manager)

• Installing Packages

• Creating and Managing package.json

• Semantic Versioning

• NPM Scripts

7. Asynchronous Programming in Node.js

• Callbacks

• Promises

• Async/Await

• Error-First Callbacks

8. Express.js Framework

• Routing

• Middleware

• Templating Engines (Pug, EJS)

• RESTful APIs

• Error Handling Middleware

9. Working with Databases

• Connecting to Databases (MongoDB, MySQL)

• Mongoose (for MongoDB)

• Sequelize (for MySQL)

• Database Migrations and Seeders

10. Authentication and Authorization

• JSON Web Tokens (JWT)

• Passport.js Middleware

• OAuth and OAuth2

11. Security

• Helmet.js (Security Middleware)

• Input Validation and Sanitization

• Secure Headers

• Cross-Origin Resource Sharing (CORS)

12. Testing and Debugging

• Unit Testing (Mocha, Chai)

• Debugging Tools (Node Inspector)

• Load Testing (Artillery, Apache Bench)

13. API Documentation

• Swagger

• API Blueprint

• Postman Documentation

14. Real-Time Applications

• WebSockets (Socket.io)

• Server-Sent Events (SSE)

• WebRTC for Video Calls

15. Performance Optimization

• Caching Strategies (in-memory, Redis)

• Load Balancing (Nginx, HAProxy)

• Profiling and Optimization Tools (Node Clinic, New Relic)

16. Deployment and Hosting

• Deploying Node.js Apps (PM2, Forever)

• Hosting Platforms (AWS, Heroku, DigitalOcean)

• Continuous Integration and Deployment (Jenkins, Travis CI)

17. RESTful API Design

• Best Practices

• API Versioning

• HATEOAS (Hypermedia as the Engine of Application State)

18. Middleware and Custom Modules

• Creating Custom Middleware

• Organizing Code into Modules

• Publish and Use Private NPM Packages

19. Logging

• Winston Logger

• Morgan Middleware

• Log Rotation Strategies

20. Streaming and Buffers

• Readable and Writable Streams

• Buffers

• Transform Streams

21. Error Handling and Monitoring

• Sentry and Error Tracking

• Health Checks and Monitoring Endpoints

22. Microservices Architecture

• Principles of Microservices

• Communication Patterns (REST, gRPC)

• Service Discovery and Load Balancing in Microservices

1 note

Enterprise Kubernetes Storage with Red Hat OpenShift Data Foundation (DO370)

In the era of cloud-native transformation, data is the fuel powering everything from mission-critical enterprise apps to real-time analytics platforms. However, as Kubernetes adoption grows, many organizations face a new set of challenges: how to manage persistent storage efficiently, reliably, and securely across distributed environments.

To solve this, Red Hat OpenShift Data Foundation (ODF) emerges as a powerful solution — and the DO370 training course is designed to equip professionals with the skills to deploy and manage this enterprise-grade storage platform.

🔍 What is Red Hat OpenShift Data Foundation?

OpenShift Data Foundation is an integrated, software-defined storage solution that delivers scalable, resilient, and cloud-native storage for Kubernetes workloads. Built on Ceph and Rook, ODF supports block, file, and object storage within OpenShift, making it an ideal choice for stateful applications like databases, CI/CD systems, AI/ML pipelines, and analytics engines.

🎯 Why Learn DO370?

The DO370: Red Hat OpenShift Data Foundation course is specifically designed for storage administrators, infrastructure architects, and OpenShift professionals who want to:

✅ Deploy ODF on OpenShift clusters using best practices.

✅ Understand the architecture and internal components of Ceph-based storage.

✅ Manage persistent volumes (PVs), storage classes, and dynamic provisioning.

✅ Monitor, scale, and secure Kubernetes storage environments.

✅ Troubleshoot common storage-related issues in production.

🛠️ Key Features of ODF for Enterprise Workloads

1. Unified Storage (Block, File, Object)

Eliminate silos with a single platform that supports diverse workloads.

2. High Availability & Resilience

ODF is designed for fault tolerance and self-healing, ensuring business continuity.

3. Integrated with OpenShift

Full integration with the OpenShift Console, Operators, and CLI for seamless Day 1 and Day 2 operations.

4. Dynamic Provisioning

Simplifies persistent storage allocation, reducing manual intervention.

5. Multi-Cloud & Hybrid Cloud Ready

Store and manage data across on-prem, public cloud, and edge environments.
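Dynamic provisioning means an application only asks for storage by class and size, and ODF creates the backing volume. The sketch below builds a PersistentVolumeClaim manifest for ODF in Python. The storage class names are the defaults we assume an internal-mode deployment creates; verify yours with `oc get storageclass`. `pvc_manifest` is a hypothetical helper. Object storage is requested through a separate bucket-claim mechanism, so it is left out.

```python
import json

# Assumption: default StorageClass names from an internal-mode ODF install,
# paired with the access mode each is typically used with.
ODF_STORAGE_CLASSES = {
    "block": ("ocs-storagecluster-ceph-rbd", "ReadWriteOnce"),  # Ceph RBD volumes
    "file": ("ocs-storagecluster-cephfs", "ReadWriteMany"),     # shared CephFS
}


def pvc_manifest(name: str, namespace: str, size_gi: int,
                 storage_type: str = "block") -> dict:
    """Build a PersistentVolumeClaim that ODF can satisfy via dynamic provisioning."""
    if storage_type not in ODF_STORAGE_CLASSES:
        raise ValueError(f"unsupported storage type: {storage_type!r}")
    storage_class, access_mode = ODF_STORAGE_CLASSES[storage_type]
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": [access_mode],
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
            "storageClassName": storage_class,
        },
    }


if __name__ == "__main__":
    # JSON output can be piped to `oc apply -f -`.
    print(json.dumps(pvc_manifest("postgres-data", "demo", 20), indent=2))
```

A database such as PostgreSQL would typically use the block class. Workloads that need the same volume mounted by several pods, such as shared CI artifacts, would use the file class.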

📘 What You Will Learn in DO370

Installing and configuring ODF in an OpenShift environment.

Creating and managing storage resources using the OpenShift Console and CLI.

Implementing security and encryption for data at rest.

Monitoring ODF health with Prometheus and Grafana.

Scaling the storage cluster to meet growing demands.

🧠 Real-World Use Cases

Databases: PostgreSQL, MySQL, MongoDB with persistent volumes.

CI/CD: Jenkins with persistent pipelines and storage for artifacts.

AI/ML: Store and manage large datasets for training models.

Kafka & Logging: High-throughput storage for real-time data ingestion.

👨🏫 Who Should Enroll?

This course is ideal for:

Storage Administrators

Kubernetes Engineers

DevOps & SRE teams

Enterprise Architects

OpenShift Administrators aiming to become RHCA in Infrastructure or OpenShift

🚀 Takeaway

If you’re serious about building resilient, performant, and scalable storage for your Kubernetes applications, DO370 is the must-have training. With ODF becoming a core component of modern OpenShift deployments, understanding it deeply positions you as a valuable asset in any hybrid cloud team.

🧭 Ready to transform your Kubernetes storage strategy? Enroll in DO370 and master Red Hat OpenShift Data Foundation today with HawkStack Technologies – your trusted Red Hat Certified Training Partner. For more details www.hawkstack.com

0 notes

virtual machine

WafaiCloud – Leading Cloud and Web Hosting Solutions in Saudi Arabia

In today’s fast-paced digital landscape, businesses across Saudi Arabia are moving rapidly towards cloud technologies to achieve scalability, security, and high performance. At the forefront of this shift is WafaiCloud, a trusted Saudi-based provider offering cutting-edge solutions including Cloud Servers, Managed Databases, VPS Hosting, and Web Hosting.

Whether you're a startup, a growing e-commerce brand, or a government agency, WafaiCloud delivers reliable and cost-effective infrastructure tailored to meet your needs—fully compliant with local regulations and high-performance standards.

Why Choose WafaiCloud?

WafaiCloud is more than a hosting provider—it's a strategic partner for digital growth. Here's why businesses across Saudi Arabia trust WafaiCloud:

1. Saudi-Based Infrastructure

All our services are hosted in local data centers within the Kingdom of Saudi Arabia. This ensures faster load times, lower latency, and compliance with Saudi data sovereignty laws, making WafaiCloud the ideal partner for businesses that demand performance and privacy.

2. 24/7 Arabic Support

Our bilingual support team is available round-the-clock to assist you in Arabic or English. We understand the unique challenges of the Saudi market and provide real-time local support, not just automated responses.

3. Customizable Solutions for Every Business

From small businesses to enterprise-level organizations, WafaiCloud offers scalable cloud services that grow with your needs. Choose from our flexible plans or request a custom infrastructure package.

Core Services We Offer

1. Cloud Servers in Saudi Arabia

Experience the power of a virtualized environment with WafaiCloud’s Cloud Servers. Our cloud infrastructure is built for speed, uptime, and data security.

Fully redundant, SSD-powered infrastructure

Scale vertically or horizontally on demand

Ideal for SaaS applications, e-commerce, and business-critical operations

API and dashboard access for full control

Our cloud servers are optimized for performance and support advanced configurations such as load balancing, backups, and firewall rules.

2. Managed Database Services

WafaiCloud simplifies database management so you can focus on development and innovation. Our Managed Database services support MySQL, PostgreSQL, and MongoDB.

Fully automated backups and high availability

Zero-downtime scaling

Monitoring, patching, and security updates included

Real-time performance metrics via dashboard

Whether you are building a fintech app or an enterprise CRM, we manage the backend to ensure 99.99% uptime.

3. VPS Hosting in Saudi Arabia

Our Virtual Private Servers (VPS) provide the power of dedicated hosting at a fraction of the cost. Perfect for developers, small businesses, and growing websites.

Root access with full customization

SSD storage and high-performance CPUs

Affordable monthly and annual plans

Ideal for hosting websites, game servers, or development environments

Hosted in local Saudi data centers, our VPS services ensure fast response times for your target audience.

4. Reliable Web Hosting

WafaiCloud’s Web Hosting solutions are ideal for bloggers, entrepreneurs, and small businesses launching their first website.

Easy-to-use cPanel access

Free SSL certificates

One-click WordPress installation

Daily backups and malware protection

Your website deserves to be online 24/7. With 99.9% guaranteed uptime and lightning-fast load speeds, we help you stay competitive in the Saudi digital space.

Built for Saudi Businesses

At WafaiCloud, we understand the needs of Saudi entrepreneurs, tech startups, e-commerce stores, and government clients. That’s why we offer:

Local billing in SAR (Saudi Riyals)

Compliance with CITC and local regulations

Cloud-native architecture optimized for Arabic content and right-to-left (RTL) websites

We make cloud adoption simple, affordable, and reliable—without compromising on quality.

SEO, Speed & Security: All in One Package

Search engine visibility begins with performance. Our hosting services are optimized for SEO and Core Web Vitals, including:

Fast server response time (TTFB)

Secure HTTPS encryption

100% mobile-friendly hosting

Integrated caching and CDN options

Google prioritizes fast, secure websites—so should you.

Getting Started with WafaiCloud

Starting your digital journey is easy with WafaiCloud. Our onboarding team will help you:

Migrate your website or database with zero downtime

Choose the right hosting package

Secure your site with SSL and DDoS protection

Monitor and scale resources from a centralized dashboard

Whether you’re launching a personal blog or a high-traffic portal, WafaiCloud ensures peace of mind with unmatched technical support.

Trusted by Developers & Businesses in Saudi Arabia

Our clients include leading tech startups, educational institutions, and growing e-commerce stores in Riyadh, Jeddah, Dammam, and beyond. They trust us because we offer:

Transparent pricing

No hidden fees

Flexible billing

Local experience and knowledge

Ready to Grow with WafaiCloud?

Make the switch to a faster, safer, and locally compliant cloud provider. WafaiCloud is here to help your business grow in the digital age with expert infrastructure, real-time support, and scalable technology.

Learn more about virtual machines

0 notes

CAP theorem in ML: Consistency vs. availability

New Post has been published on https://thedigitalinsider.com/cap-theorem-in-ml-consistency-vs-availability/

The CAP theorem has long been the unavoidable reality check for distributed database architects. However, as machine learning (ML) evolves from isolated model training to complex, distributed pipelines operating in real-time, ML engineers are discovering that these same fundamental constraints also apply to their systems. What was once considered primarily a database concern has become increasingly relevant in the AI engineering landscape.

Modern ML systems span multiple nodes, process terabytes of data, and increasingly need to make predictions with sub-second latency. In this distributed reality, the trade-offs between consistency, availability, and partition tolerance aren’t academic — they’re engineering decisions that directly impact model performance, user experience, and business outcomes.

This article explores how the CAP theorem manifests in AI/ML pipelines, examining specific components where these trade-offs become critical decision points. By understanding these constraints, ML engineers can make better architectural choices that align with their specific requirements rather than fighting against fundamental distributed systems limitations.

Quick recap: What is the CAP theorem?

The CAP theorem, formulated by Eric Brewer in 2000, states that in a distributed data system, you can guarantee at most two of these three properties simultaneously:

Consistency: Every read receives the most recent write or an error

Availability: Every request receives a non-error response (though not necessarily the most recent data)

Partition tolerance: The system continues to operate despite network failures between nodes
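The choice these definitions force only appears once a partition happens. The toy simulation below makes it concrete with two replicas of a single value. `ReplicatedRegister` and `PartitionError` are hypothetical names, and a shared counter stands in for write timestamps. With only two replicas, neither side of a partition holds a majority, so the CP variant refuses everything until the partition heals. The AP variant keeps answering and may return stale data. Real CP systems using majority quorums can still serve the majority side.

```python
import itertools


class PartitionError(Exception):
    """Raised when a CP-mode register refuses a request during a partition."""


class ReplicatedRegister:
    """Toy two-replica register (hypothetical) showing the CP vs AP choice."""

    def __init__(self, mode: str) -> None:
        if mode not in ("CP", "AP"):
            raise ValueError("mode must be 'CP' or 'AP'")
        self.mode = mode
        self.replicas = {"a": (0, None), "b": (0, None)}  # node -> (version, value)
        self.partitioned = False
        self._clock = itertools.count(1)  # stand-in for write timestamps

    def partition(self) -> None:
        self.partitioned = True

    def write(self, node: str, value) -> None:
        if self.partitioned and self.mode == "CP":
            raise PartitionError("peer unreachable: rejecting write")
        entry = (next(self._clock), value)
        # Healthy network: replicate synchronously. Partition (AP): local only.
        targets = [node] if self.partitioned else list(self.replicas)
        for n in targets:
            self.replicas[n] = entry

    def read(self, node: str):
        if self.partitioned and self.mode == "CP":
            raise PartitionError("cannot confirm latest value: rejecting read")
        return self.replicas[node][1]  # AP: possibly stale

    def heal(self) -> None:
        """End the partition; replicas converge with last-writer-wins."""
        self.partitioned = False
        newest = max(self.replicas.values(), key=lambda entry: entry[0])
        for n in self.replicas:
            self.replicas[n] = newest
```

In AP mode, writing `"v2"` to node `a` during a partition leaves node `b` answering `"v1"` until `heal()` runs. That is eventual consistency in miniature.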

Traditional database examples illustrate these trade-offs clearly:

CA systems: Traditional relational databases like PostgreSQL prioritize consistency and availability but struggle when network partitions occur.

CP systems: Databases like HBase or MongoDB (in certain configurations) prioritize consistency over availability when partitions happen.

AP systems: Cassandra and DynamoDB favor availability and partition tolerance, adopting eventual consistency models.

What’s interesting is that these same trade-offs don’t just apply to databases — they’re increasingly critical considerations in distributed ML systems, from data pipelines to model serving infrastructure.

Where the CAP theorem shows up in ML pipelines

Data ingestion and processing

The first stage where CAP trade-offs appear is in data collection and processing pipelines:

Stream processing (AP bias): Real-time data pipelines using Kafka, Kinesis, or Pulsar prioritize availability and partition tolerance. They’ll continue accepting events during network issues, but may process them out of order or duplicate them, creating consistency challenges for downstream ML systems.

Batch processing (CP bias): Traditional ETL jobs using Spark, Airflow, or similar tools prioritize consistency — each batch represents a coherent snapshot of data at processing time. However, they sacrifice availability by processing data in discrete windows rather than continuously.

This tension helps explain why the Lambda and Kappa architectures emerged. Lambda combines a batch layer for correctness with a streaming layer for freshness. Kappa handles both with a single replayable stream and recomputes results by reprocessing the log.
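The out-of-order and duplicate deliveries described for stream processing are usually handled before data reaches a model. The sketch below is a minimal illustration, not any framework's API. It assumes each event carries a unique, producer-assigned `event_id` and an `event_time`; both field names are hypothetical. It drops redeliveries and restores event-time order within one micro-batch.

```python
from typing import Iterable, NamedTuple


class Event(NamedTuple):
    event_id: str    # unique ID assigned by the producer (assumption)
    event_time: int  # when the event happened, e.g. epoch seconds
    payload: dict


def clean_micro_batch(events: Iterable[Event], seen_ids: set) -> list:
    """Drop redelivered events and sort the rest by event time.

    seen_ids persists across batches, so a duplicate arriving in a later
    batch is dropped too. In production it would live in a durable store.
    """
    fresh = []
    for e in events:
        if e.event_id in seen_ids:
            continue  # redelivery caused by an at-least-once retry
        seen_ids.add(e.event_id)
        fresh.append(e)
    # After retries, arrival order is not event order; restore event order.
    return sorted(fresh, key=lambda e: e.event_time)
```

This only reorders within a single batch. Events that arrive in a later batch with an earlier timestamp need windowing and watermarks, which this sketch leaves out.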

Feature stores

Feature stores sit at the heart of modern ML systems, and they face particularly acute CAP theorem challenges.

Training-serving skew: A core purpose of a feature store is to keep feature values consistent between training and serving, so that models see the same features in production that they were trained on. Achieving this while maintaining high availability during network partitions is extraordinarily difficult.

Consider a global feature store serving multiple regions: Do you prioritize consistency by ensuring all features are identical across regions (risking unavailability during network issues)? Or do you favor availability by allowing regions to diverge temporarily (risking inconsistent predictions)?
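The two options in that question can be written as two read policies. This is a toy sketch, not a real feature-store API. The `FeatureValue` type, the per-write `version` counter, and the rule that a consistent read needs every region to agree are all simplifying assumptions. Real systems usually rely on a leader or a quorum rather than unanimous agreement.

```python
from dataclasses import dataclass


@dataclass
class FeatureValue:
    value: float
    version: int  # increases on every write (assumption for this sketch)


class FeatureUnavailable(Exception):
    """Raised when a read cannot be served under the chosen policy."""


def read_feature(key: str, local: dict, remotes: list, policy: str) -> float:
    """Read one feature from a multi-region store (toy model).

    local:   this region's replica, a dict of key -> FeatureValue
    remotes: other regions' replicas; None marks a region cut off by a partition
    policy:  "available" (AP) or "consistent" (CP)
    """
    if policy == "available":
        # AP: serve whatever this region has, even if other regions are ahead.
        if key not in local:
            raise FeatureUnavailable(f"{key}: not in local replica")
        return local[key].value
    if policy != "consistent":
        raise ValueError(f"unknown policy: {policy}")
    replicas = [local, *remotes]
    # CP: refuse to answer unless every region is reachable and agrees.
    if any(r is None or key not in r for r in replicas):
        raise FeatureUnavailable(f"{key}: a region is unreachable or missing the key")
    if len({r[key].version for r in replicas}) != 1:
        raise FeatureUnavailable(f"{key}: regions disagree on the latest version")
    return local[key].value
```

With a lagging local region, `"available"` returns the older value and `"consistent"` raises. That is the trade-off the question describes.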

Model training

Distributed training introduces another domain where CAP trade-offs become evident:

Synchronous SGD (CP bias): Frameworks like distributed TensorFlow with synchronous updates prioritize consistency of parameters across workers, but can become unavailable if some workers slow down or disconnect.

Asynchronous SGD (AP bias): Allows training to continue even when some workers are unavailable but sacrifices parameter consistency, potentially affecting convergence.

Federated learning: Perhaps the clearest example of CAP in training — heavily favors partition tolerance (devices come and go) and availability (training continues regardless) at the expense of global model consistency.
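The synchronous and asynchronous behavior above can be seen in a one-parameter toy problem. This is an illustrative sketch, not TensorFlow's implementation. Each hypothetical worker holds a data shard whose loss is `0.5 * (w - target)**2`, so the combined optimum is the mean of the targets.

```python
def grad(w: float, target: float) -> float:
    """Gradient of 0.5 * (w - target) ** 2, one worker's loss on its shard."""
    return w - target


def sync_step(w, targets, alive, lr=0.5):
    """Synchronous SGD: wait for a gradient from every worker, then average."""
    if not all(alive):
        return None  # the barrier never completes, so training stalls
    g = sum(grad(w, t) for t in targets) / len(targets)
    return w - lr * g


def async_run(w, targets, alive, steps, lr=0.5, staleness=1):
    """Asynchronous SGD: workers take turns pushing gradients computed on
    parameters that are `staleness` updates old; dead workers are skipped."""
    history = [w]
    for step in range(steps):
        i = step % len(targets)
        if not alive[i]:
            continue  # training continues without this worker
        stale_w = history[max(0, len(history) - 1 - staleness)]
        history.append(history[-1] - lr * grad(stale_w, targets[i]))
    return history[-1]


targets = [2.0, 4.0]  # the combined optimum is their mean, 3.0
print(sync_step(0.0, targets, [True, False]))                 # None: stalled
print(round(async_run(0.0, targets, [True, False], 200), 6))  # 2.0: worker 0's optimum
```

With one worker down, the synchronous version makes no progress. The asynchronous version keeps training but converges to the live worker's optimum (2.0) rather than the global one (3.0). This is a small-scale version of the convergence and consistency cost noted above.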

Model serving

When deploying models to production, CAP trade-offs directly impact user experience:

Hot deployments vs. consistency: Rolling updates to models can lead to inconsistent predictions during deployment windows — some requests hit the old model, some the new one.

A/B testing: How do you ensure users consistently see the same model variant? This becomes a classic consistency challenge in distributed serving.

Model versioning: Immediate rollbacks vs. ensuring all servers have the exact same model version is a clear availability-consistency tension.
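One common way to keep A/B assignments consistent without shared state is deterministic hashing. Every server derives the same variant from the same user ID, so a partition cannot cause disagreement. The sketch below shows that approach under assumptions: the experiment name, variant labels and weights are hypothetical.

```python
import hashlib
from typing import Sequence


def assign_variant(user_id: str, experiment: str,
                   variants: Sequence[str], weights: Sequence[float]) -> str:
    """Deterministically map a user to a model variant (no coordination needed)."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # Take 53 bits so the bucket is an exactly representable float in [0, 1).
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    cumulative = 0.0
    for variant, weight in zip(variants, weights):
        cumulative += weight
        if bucket < cumulative:
            return variant
    return variants[-1]  # guard against weights that sum to slightly less than 1


print(assign_variant("user-42", "ranker-v2-test", ["control", "candidate"], [0.5, 0.5]))
```

Salting the hash with the experiment name keeps assignments independent across experiments. Changing the weights mid-experiment moves some users between variants, which is itself a consistency event worth logging.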


Case studies: CAP trade-offs in production ML systems

Real-time recommendation systems (AP bias)

E-commerce and content platforms typically favor availability and partition tolerance in their recommendation systems. If the recommendation service is momentarily unable to access the latest user interaction data due to network issues, most businesses would rather serve slightly outdated recommendations than no recommendations at all.

Netflix, for example, has explicitly designed its recommendation architecture to degrade gracefully, falling back to increasingly generic recommendations rather than failing if personalization data is unavailable.

Healthcare diagnostic systems (CP bias)

In contrast, ML systems for healthcare diagnostics typically prioritize consistency over availability. Medical diagnostic systems can’t afford to make predictions based on potentially outdated information.

A healthcare ML system might refuse to generate predictions rather than risk inconsistent results when some data sources are unavailable — a clear CP choice prioritizing safety over availability.
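A fail-closed gate like that can be sketched as a check that runs before the model is called. The source names, the freshness threshold, and the `model` callable below are hypothetical. The point is only that missing or stale inputs lead to a refusal instead of a best-effort prediction.

```python
from datetime import datetime, timedelta, timezone
from typing import Callable


class InsufficientData(Exception):
    """The system declines to predict rather than use incomplete inputs."""


def gated_predict(model: Callable[[dict], float], inputs: dict, required: list,
                  max_age: timedelta, now: datetime) -> float:
    """Predict only if every required source is present and fresh.

    inputs maps source name -> (value, timestamp of that value).
    """
    problems = []
    for source in required:
        if source not in inputs:
            problems.append(f"{source}: missing")
        elif now - inputs[source][1] > max_age:
            problems.append(f"{source}: stale")
    if problems:
        # CP choice: surface an error instead of a possibly inconsistent result.
        raise InsufficientData("; ".join(problems))
    return model({s: inputs[s][0] for s in required})


now = datetime(2024, 1, 1, 12, tzinfo=timezone.utc)
inputs = {"labs": (1.5, now - timedelta(minutes=5)), "vitals": (2.0, now)}
print(gated_predict(lambda x: x["labs"] + x["vitals"], inputs,
                    ["labs", "vitals"], timedelta(hours=1), now))  # 3.5
```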

Edge ML for IoT devices (AP bias)

IoT deployments with on-device inference must handle frequent network partitions as devices move in and out of connectivity. These systems typically adopt AP strategies:

Locally cached models that operate independently

Asynchronous model updates when connectivity is available

Local data collection with eventual consistency when syncing to the cloud

Google’s Live Transcribe for hearing impairment uses this approach — the speech recognition model runs entirely on-device, prioritizing availability even when disconnected, with model updates happening eventually when connectivity is restored.
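The three AP strategies listed above can be combined in a small device-side sketch. This is illustrative only. The `EdgeDevice` class, its outbox, and the `(version, model)` pair advertised by the cloud are hypothetical names, not any vendor's SDK.

```python
from collections import deque


class EdgeDevice:
    """On-device inference with an outbox that syncs when connectivity returns."""

    def __init__(self, model_version, model):
        self.model_version = model_version
        self.model = model      # locally cached model: always available
        self.outbox = deque()   # local observations not yet uploaded
        self.online = False

    def infer(self, x):
        y = self.model(x)  # works offline; no network call
        self.outbox.append((self.model_version, x, y))
        return y

    def sync(self, cloud_log, latest):
        """Upload buffered data and adopt a newer model, if connected.

        latest is the (version, model) pair the cloud currently advertises.
        Returns the number of records uploaded.
        """
        if not self.online:
            return 0  # stay available; reconcile later (eventual consistency)
        uploaded = 0
        while self.outbox:
            cloud_log.append(self.outbox.popleft())
            uploaded += 1
        version, model = latest
        if version > self.model_version:
            self.model_version, self.model = version, model
        return uploaded
```

Each uploaded record carries the model version that produced it. The cloud side can then tell which predictions came from an older model during the disconnected period.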

Strategies to balance CAP in ML systems

Given these constraints, how can ML engineers build systems that best navigate CAP trade-offs?

Graceful degradation

Design ML systems that can operate at varying levels of capability depending on data freshness and availability:

Fall back to simpler models when real-time features are unavailable

Use confidence scores to adjust prediction behavior based on data completeness

Implement tiered timeout policies for feature lookups

DoorDash’s ML platform, for example, incorporates multiple fallback layers for their delivery time prediction models — from a fully-featured real-time model to progressively simpler models based on what data is available within strict latency budgets.
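A fallback chain with tiered timeouts might look like the sketch below. It does not describe DoorDash's implementation. The tier names, timeouts, and the convention that a tier raises `KeyError` when a feature it needs is missing are all assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout

_pool = ThreadPoolExecutor(max_workers=4)


def predict_with_fallbacks(tiers, request, default):
    """Try each (name, predict_fn, timeout_s) tier in order, richest model first.

    A tier is skipped if it exceeds its timeout or raises KeyError because a
    feature it needs is missing. If every tier fails, return `default`.
    """
    for name, predict_fn, timeout_s in tiers:
        future = _pool.submit(predict_fn, request)
        try:
            return name, future.result(timeout=timeout_s)
        except FutureTimeout:
            continue  # too slow; the worker thread keeps running in the background
        except KeyError:
            continue  # a required feature is missing for this tier
    return "default", default
```

Python cannot cancel a running thread, so a timed-out tier still consumes a worker until it finishes. A production version would also enforce an overall latency budget across all tiers.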

Hybrid architectures

Combine approaches that make different CAP trade-offs:

Lambda architecture: Use batch processing (CP) for correctness and stream processing (AP) for recency

Feature store tiering: Store consistency-critical features differently from availability-critical ones

Materialized views: Pre-compute and cache certain feature combinations to improve availability without sacrificing consistency

Uber’s Michelangelo platform exemplifies this approach, maintaining both real-time and batch paths for feature generation and model serving.
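The Lambda item in the list above can be sketched as a serving-time merge. A batch view covers everything up to a cutoff, and stream events after the cutoff are layered on top. The per-key event count used as the feature here is a hypothetical example.

```python
def lambda_view(batch_view, batch_cutoff, stream_events):
    """Merge a batch view with speed-layer events that arrived after its cutoff.

    batch_view:    dict key -> count, computed by the batch layer up to batch_cutoff
    stream_events: iterable of (timestamp, key) from the streaming layer
    """
    merged = dict(batch_view)  # the batch result is the consistent baseline
    for ts, key in stream_events:
        if ts > batch_cutoff:  # older events are already in the batch view
            merged[key] = merged.get(key, 0) + 1
    return merged


print(lambda_view({"u1": 5}, 100, [(90, "u1"), (110, "u1"), (120, "u2")]))
# {'u1': 6, 'u2': 1}
```

When the next batch run completes, its cutoff moves forward and the stream contribution before that point is discarded. Any drift in the speed layer is therefore corrected periodically.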

Consistency-aware training

Build consistency challenges directly into the training process:

Train with artificially delayed or missing features to make models robust to these conditions

Use data augmentation to simulate feature inconsistency scenarios

Incorporate timestamp information as explicit model inputs

Facebook’s recommendation systems are trained with awareness of feature staleness, allowing the models to adjust predictions based on the freshness of available signals.
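The three training techniques listed above can be combined in a small augmentation step. This is a sketch under assumptions: each feature is a list of historical values ordered newest last, and a hypothetical `<name>_age` column exposes staleness to the model. It is not Facebook's method.

```python
import random


def augment_with_staleness(row, volatile, max_lag, p_missing, rng):
    """Return a training row that mimics serving-time feature degradation.

    row:      dict feature -> list of historical values, newest last
    volatile: names of real-time features that may lag or be missing at serving
    """
    out = {}
    for name, history in row.items():
        if name not in volatile:
            out[name] = history[-1]  # stable features are used as-is
            continue
        if rng.random() < p_missing:
            out[name] = None          # simulate a failed feature lookup
            out[f"{name}_age"] = -1   # sentinel meaning "unavailable"
        else:
            lag = rng.randint(0, min(max_lag, len(history) - 1))
            out[name] = history[-1 - lag]  # simulate a stale cached value
            out[f"{name}_age"] = lag       # staleness as an explicit model input
    return out


rng = random.Random(0)
print(augment_with_staleness({"price": [10.0], "clicks": [1, 2, 3, 4]}, {"clicks"}, 2, 0.1, rng))
```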

Intelligent caching with TTLs

Implement caching policies that explicitly acknowledge the consistency-availability trade-off:

Use time-to-live (TTL) values based on feature volatility

Implement semantic caching that understands which features can tolerate staleness

Adjust cache policies dynamically based on system conditions
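The caching policies above can be sketched as a per-feature TTL cache that prefers availability. When the source fails, it serves a stale value for a bounded extra window. The `fetch` callable and the TTL numbers are assumptions. `max_stale` can be changed at runtime to loosen or tighten the policy as system conditions change.

```python
import time


class FeatureCache:
    """Per-feature TTL cache that can serve stale values when the source fails."""

    def __init__(self, fetch, ttls, max_stale, clock=time.monotonic):
        self.fetch = fetch          # fetch(name) -> value; may raise
        self.ttls = ttls            # name -> seconds a value counts as fresh
        self.max_stale = max_stale  # name -> extra seconds a stale value may be served
        self.clock = clock
        self._store = {}            # name -> (value, fetched_at)

    def get(self, name):
        """Return (value, "fresh" | "stale")."""
        now = self.clock()
        cached = self._store.get(name)
        if cached and now - cached[1] <= self.ttls[name]:
            return cached[0], "fresh"
        try:
            value = self.fetch(name)
        except Exception:
            # Source unavailable: serve stale data if this feature tolerates it.
            if cached and now - cached[1] <= self.ttls[name] + self.max_stale[name]:
                return cached[0], "stale"
            raise
        self._store[name] = (value, now)
        return value, "fresh"
```

Returning the `"stale"` flag lets callers count stale serves. That feeds directly into the freshness metrics discussed below.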


Design principles for CAP-aware ML systems

Understand your critical path

Not all parts of your ML system have the same CAP requirements:

Map your ML pipeline components and identify where consistency matters most vs. where availability is crucial

Distinguish between features that genuinely impact predictions and those that are marginal

Quantify the impact of staleness or unavailability for different data sources

Align with business requirements

The right CAP trade-offs depend entirely on your specific use case:

Revenue impact of unavailability: If ML system downtime directly impacts revenue (e.g., payment fraud detection), you might prioritize availability

Cost of inconsistency: If inconsistent predictions could cause safety issues or compliance violations, consistency might take precedence

User expectations: Some applications (like social media) can tolerate inconsistency better than others (like banking)

Monitor and observe

Build observability that helps you understand CAP trade-offs in production:

Track feature freshness and availability as explicit metrics

Measure prediction consistency across system components

Monitor how often fallbacks are triggered and their impact
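The three signals above can start as simple in-process counters before being exported to a real metrics system. The sketch below is illustrative. The metric names are hypothetical, and "components agreed" stands in for whatever cross-component consistency check a given system can run.

```python
import math


def percentile(values, p):
    """Nearest-rank percentile of a non-empty sequence (0 < p <= 100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


class CapMetrics:
    """Toy counters for feature freshness, consistency, and fallbacks."""

    def __init__(self):
        self.feature_ages_s = []  # age of the feature values used, per prediction
        self.requests = 0
        self.fallbacks = 0
        self.disagreements = 0    # predictions that differed across components

    def record(self, feature_age_s, used_fallback, components_agreed=True):
        self.requests += 1
        self.feature_ages_s.append(feature_age_s)
        self.fallbacks += int(used_fallback)
        self.disagreements += int(not components_agreed)

    def snapshot(self):
        if not self.requests:
            return {}
        return {
            "feature_age_p50_s": percentile(self.feature_ages_s, 50),
            "feature_age_p99_s": percentile(self.feature_ages_s, 99),
            "fallback_rate": self.fallbacks / self.requests,
            "inconsistency_rate": self.disagreements / self.requests,
        }
```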



Text

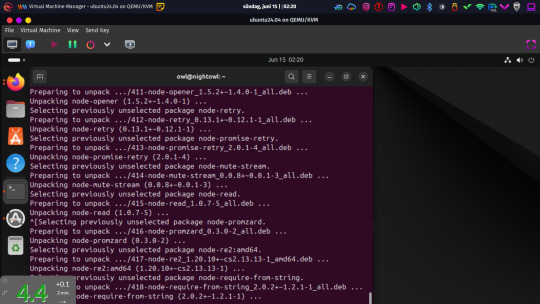

Progress, at least!

I got a VM set up and running, not fullscreen here so I could use my main system to screenshot it. Instead of Ubuntu Server, I decided to go with a minimal install of the desktop version with the GUI for accessibility more than anything else. I don't like Gnome, but I'll live with it. Rarely intend to use that after everything is set up and running anyway. Haven't set up clipboard (or any other) passthrough, so it was convenient having Firefox inside there to paste text in from. Besides some basic system utilities, this essentially just started out with Firefox installed and not much else.

This was while installing what felt like a shitload of other stuff required to get the database and Nightscout set up.

Now I do have both of those installed, and a fresh database with users created for Nightscout in MongoDB. I will have to leave the rest of the configuration and testing for tomorrow. But, at least as of now? Everything does appear to be there and functional. Got something accomplished, at least.


Text

hi

✅ 1. Watches for New Files: monitors directories or HCP/S3 for inbound files.

Triggered via scheduled job or Kafka metadata message.

✅ 2. Reads File Content: uses FileManagerAdapter (e.g., LocalFileLoaderAdapter, HCPFileManagerAdapter).

Reads CSV/flat file line-by-line.

✅ 3. Validates File Structure: uses BulkScoringOrderValidationService.

Checks for:

Proper batch header/trailer (BH / BT)

Record count consistency

Required fields present

✅ 4. Creates Domain Object: builds a BulkScoringOrder.

///////
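The structural checks in step ✅ 3 of the notes above (BH/BT framing, record count, required fields) can be sketched in Python. The file layout here is hypothetical, chosen only to illustrate the checks, and this is not the actual BulkScoringOrderValidationService:

    BH,<batch_id>
    D,<txn_id>,<amount>,<payee>   (one or more detail records)
    BT,<detail_record_count>

```python
import csv
import io


def validate_bulk_file(text: str) -> list:
    """Return a list of validation errors (empty if the file looks valid)."""
    rows = [row for row in csv.reader(io.StringIO(text)) if row]  # skip blank lines
    errors = []
    if not rows or rows[0][0] != "BH":
        errors.append("missing batch header (BH)")
    if not rows or rows[-1][0] != "BT":
        errors.append("missing batch trailer (BT)")
        return errors  # without a trailer the record count cannot be checked
    details = rows[1:-1]
    # line_no counts non-blank lines, starting after the header.
    for line_no, row in enumerate(details, start=2):
        # Required fields: txn_id, amount and payee must all be non-empty.
        if len(row) < 4 or not all(field.strip() for field in row[1:4]):
            errors.append(f"line {line_no}: missing required field")
    trailer = rows[-1]
    if (len(trailer) < 2 or not trailer[1].strip().isdigit()
            or int(trailer[1]) != len(details)):
        errors.append(f"trailer count does not match {len(details)} detail record(s)")
    return errors


print(validate_bulk_file("BH,B001\nD,T1,10.00,ACME\nD,T2,5.50,Globex\nBT,2\n"))  # []
```

Collecting all errors instead of failing on the first one makes it easier to report every problem in a rejected file at once.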

This Spring Batch + Kafka microservice ingests raw payment instruction files (like CSV), validates and transforms them into structured scoring records, and sends them to downstream services via Kafka.

Kafka (BulkScoringOrder) → S3: download files → Batch Job launched

Step 1: File Header → FileHeaderInfo → Mongo

Step 2: Batch Header → BatchHeaderInfo → Mongo

Step 3: Instructions → ScoringOrder → Kafka + Mongo + Redis

✅ Core Responsibilities

Kafka Consumer: listens for file arrival metadata (BulkScoringOrder)

S3/Local File Access: reads actual instruction and header files

Spring Batch Job: launches the step-based processing flow

Validation + Transformation: maps raw lines to domain objects (ScoringOrder, FileHeaderInfo)

Redis: tracks inflight status (INFLIGHT_PROCESSING, TRANSFORMED, etc.)

/////

High-Level Flow:

Listens to Kafka topic validated-out

Retrieves Account info from Redis

Enriches the Payment object

Persists to MongoDB

Publishes enriched message to Kafka topic enriched-in

///////////////////////////////////////////////////////////////

🔁 Execution Steps

🟢 1. Kafka message arrives: PaymentEnrichmentKafkaClient consumes a message from validated-out.

Message payload is a Payment object

🧠 2. Enrichment decision: reads the isProcessingEnabled flag (@ConfigProperty).

///

Transformation Service – High-Level Overview

✅ 1. Consumes Validated Instructions: reads messages from Kafka topic received-out.

Messages contain raw payment data (e.g., ACXInstruction)

✅ 2. Deserializes Dynamically: uses GFDTransformableDeserializer.

Detects PaymentType (e.g., ACX) from Kafka headers

Converts to matching instruction class (e.g., ACXInstruction)

✅ 3. Transforms Into Standard Format: invokes InstructionTransformationUseCase.

Internally uses InstructionMapperFactory + ACXInstructionMapper

Converts raw input into GFDInstruction

✅ 4. Enriches and Wraps as ScoringOrder: combines GFDInstruction + Kafka headers into a ScoringOrder.

Adds metadata like gfdId, batchId, and status: TRANSFORMED
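The header-driven dispatch in steps 2 to 4 can be sketched as two registries: one from PaymentType to deserializer and one from instruction class to mapper. This is a hypothetical Python analogue of GFDTransformableDeserializer, InstructionMapperFactory and ACXInstructionMapper, not their actual code. The JSON field names, the header keys, and the use of a dict in place of ScoringOrder are assumptions.

```python
import json
from dataclasses import dataclass


@dataclass
class ACXInstruction:  # hypothetical raw shape
    txn_id: str
    amount_cents: int


@dataclass
class GFDInstruction:  # hypothetical normalized shape
    transaction_id: str
    amount_cents: int
    source_type: str


# PaymentType header value -> deserializer for that raw format.
DESERIALIZERS = {"ACX": lambda raw: ACXInstruction(**json.loads(raw))}
# Instruction class -> mapper into the standard format.
MAPPERS = {ACXInstruction: lambda i: GFDInstruction(i.txn_id, i.amount_cents, "ACX")}


def transform(raw: bytes, headers: dict) -> dict:
    """Deserialize by PaymentType, map to GFDInstruction, wrap with metadata."""
    instruction = DESERIALIZERS[headers["PaymentType"]](raw)  # KeyError if unknown type
    gfd = MAPPERS[type(instruction)](instruction)
    return {
        "gfdInstruction": gfd,
        "gfdId": headers.get("gfdId"),
        "batchId": headers.get("batchId"),
        "status": "TRANSFORMED",
    }
```

Adding a new payment type means registering one deserializer and one mapper, without touching `transform` itself.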

///

Kafka Consumer Layer (Adapter), class SubmitterKafkaClient:

Listens to enriched-out Kafka topic.

Extracts headers using MessageHeaderUtils.

Builds a ScoringOrder domain object.

Invokes PaymentPFDMapper to create the byte stream.

Emits the final message to pfd-request topic.

Updates the transaction status in Redis via InflightOutputPort.

Business Logic Layer (interfaces and services):

SubmitterUseCase – Defines the core business operation.

SubmitterService – Implements the logic: validate, map, update status.

Domain Layer (main domain classes):

ScoringOrder: Root domain object.

GFDInstruction: Enriched transaction info.

TransactionInfo, Addenda, Payee, Address: Subcomponents.

InFlight: tracks the processing state of an instruction.

//

The File Egress Service is responsible for moving enriched and processed fraud scoring files to their final destination (e.g., cloud bucket, downstream system) and updating processing status in Redis.

It acts after the Submitter service and before Notification or Export/Audit systems.

🧱 Core Responsibilities

✅ Listen to Kafka: consumes from the egress-requested-out topic.

📦 Move Files: transfers the file from the input bucket to the output bucket (e.g., S3/HCP).

🔄 Update Processing Status: updates Redis with SCORING_FILE_MOVED_TO_OUTPUT_FOLDER.

❌ Handle Missing Files: validates that the file is present before moving it.

⚙️ Modular & Configurable: uses Quarkus config injection for paths, buckets, and endpoints.

🧩 Architecture Overview

Kafka Topic: egress-requested-out → FileEgressKafkaClient (triggers the moveFile() use case) → FileEgressInputPort (UseCase: moves the file, updates Redis status)

FileEgressInputPort then calls two adapters: FileSystemAdapter (validates that the file exists) and InflightRedisAdaptor (writes status to Redis).

/////////

The file-generator service is responsible for:

✅ Listening to Kafka for FraudDecision messages

✅ Tracking progress of a batch using Redis

✅ Generating a response file when all decisions for a batch are received

✅ Uploading the file to object storage (e.g., HCP or S3)

🔄 High-Level Flow

1. The file-generator Kafka consumer (@Incoming FraudDecision messages) reads from the file-collecting-out topic.

2. It extracts the gfdId from each decision.

3. Redis (inflight tracker) holds the total expected children (recorded earlier) and increments the processed count.

4. Once all children have been received, the service formats the response file content (e.g., one line per decision: TXN001|OK).

5. It writes the file using FileSystemAdapter (local path: /scoring/temp/GFD123.txt).

6. It uploads the file to S3 (HCP) using HCPFileHandlingAdapter.
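The Redis tracking step in the flow above ("check if all children received") can be sketched with an in-memory stand-in. One design point is worth noting as an assumption about the real system: Kafka may redeliver a decision, and a plain counter incremented per message would then double-count. Recording decisions keyed by transaction ID avoids that; with Redis, a set via SADD/SCARD is one option. The class and method names below are hypothetical.

```python
class BatchTracker:
    """In-memory stand-in for the Redis inflight tracker (hypothetical sketch)."""

    def __init__(self):
        self.expected = {}      # gfdId -> number of child decisions expected
        self.received = {}      # gfdId -> {txn_id: outcome}
        self.completed = set()  # batches whose response file was already produced

    def register(self, gfd_id: str, expected_children: int) -> None:
        self.expected[gfd_id] = expected_children
        self.received.setdefault(gfd_id, {})

    def on_decision(self, gfd_id: str, txn_id: str, outcome: str):
        """Record one FraudDecision; return the response file body exactly once,
        when the last expected decision for the batch arrives."""
        if gfd_id in self.completed:
            return None  # late redelivery after the file was generated
        decisions = self.received.setdefault(gfd_id, {})
        decisions[txn_id] = outcome  # keyed by txn_id, so redeliveries are not double-counted
        if gfd_id not in self.expected or len(decisions) < self.expected[gfd_id]:
            return None
        self.completed.add(gfd_id)
        # One line per decision, e.g. "TXN001|OK", sorted for a stable file.
        return "".join(f"{txn}|{result}\n" for txn, result in sorted(decisions.items()))
```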


Text

The Science and Art of Web Development: Constructing the Internet Future

By Namrata Universal

A strong online presence is no longer optional; it is a requirement in today's rapidly changing internet era. Whether you are a small business owner, an artist, or a large corporation, your website is often the first impression a potential customer gets of your company. That is where web development comes into play. It's not just about creating a pretty page; it's about building an interesting, engaging, and useful place on the web that has a clear purpose and meets users' needs.

Web development covers everything from plain text-based websites to complex web applications, social networking applications, and e-commerce platforms. It is the backbone of every web-based portal and plays an important part in shaping the modern digital experience.

What is Website Development?

Web development is the work of building websites hosted on the internet or on an intranet. It includes web design, web content creation, client-side and server-side scripting, and network security configuration, among other tasks.

Web development is usually divided into two broad categories:

Frontend Development (Client-Side): This is what users see, meaning everything on a web page such as layout, fonts, colors, menus, and forms. Frontend developers use HTML, CSS, and JavaScript to create the site's visual look.

Backend Development (Server-Side): This covers everything that happens behind the scenes: the databases, servers, and application logic that power the frontend. Common backend technologies include PHP, Python, Ruby, and Node.js, along with databases such as MySQL and MongoDB.
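To show what "application logic that powers the frontend" can mean in practice, here is a minimal Python backend written against the standard WSGI interface. It is a sketch. The `/api/products` route and the hard-coded product list are hypothetical, and a real backend would query a database such as MySQL or MongoDB at that point.

```python
import json


def app(environ, start_response):
    """Minimal WSGI backend: returns JSON that a frontend could fetch and render."""
    if environ.get("REQUEST_METHOD") == "GET" and environ.get("PATH_INFO") == "/api/products":
        # Hard-coded data stands in for a database query.
        body = json.dumps([{"id": 1, "name": "Notebook"}]).encode("utf-8")
        start_response("200 OK", [("Content-Type", "application/json"),
                                  ("Content-Length", str(len(body)))])
        return [body]
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"not found"]
```

To try it locally, pass `app` to `wsgiref.simple_server.make_server("127.0.0.1", 8000, app)` and call `serve_forever()` on the result. A frontend page would then request `/api/products` with JavaScript and render the list.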

The Website Development Process

Planning and Research: Before writing any code, you need to define the website's purpose, audience, and main goals. Competitor analysis and research into current UX trends also happen at this stage.

Designing the Layout: Designers create wireframes and prototypes with tools like Adobe XD, Figma, or Sketch. This step makes sure the UI is accessible and consistent with the brand.

Development: This is where coding begins. Frontend developers bring the design to life, and backend developers make everything work seamlessly. Mobile responsiveness and cross-browser compatibility are also handled at this stage.

Testing and Launch: Thorough testing is done to fix bugs, improve page load speed, and make the site user-friendly. Once everything is working well, the site is launched on a live server.

Maintenance and Updates: A site is never really "done." Periodic updates, performance checks, and content refreshes are necessary to keep it current and secure.

Why Website Development Matters

First Impressions Count: Your site is often the first place prospective clients visit. A professionally developed site helps build credibility and encourages user engagement.

Increased Accessibility: A well-built website ensures that everyone, including people with disabilities, can access your content, which also extends your reach.

Search Engine Optimization (SEO): SEO is a crucial aspect of web development. A clean codebase, fast loading times, and mobile-friendliness all contribute to better search rankings.

Scalability and Performance: A well-designed website can handle growing traffic and user activity without slowing down, so it can scale as the business grows.

Better Conversion Rates: A simple-to-use experience and a fast-loading interface reduce bounce rates and improve the chances of converting visitors into customers.

Trends in Modern Web Design

Progressive Web Applications (PWAs): These combine the best of the web and mobile apps, offering offline support, fast performance, and a native-app feel.

Voice Search Optimization: With voice assistants now widespread, websites also need to be optimized for voice search queries.

AI and Chatbots: Many websites now use AI to offer personalized user experiences, and chatbots to provide immediate support.

Single Page Applications (SPAs): SPAs load content dynamically within a single page instead of reloading the whole page, which makes interactions faster.

Motion UI: Smooth animations and transitions make user interactions feel more fluid and reinforce the brand narrative.

Skills for a Website Developer

An effective web developer is both analytical and creative. Some of the core skills are:

HTML, CSS, JavaScript proficiency

Understanding of libraries and frameworks like React, Angular, or Vue.js

Knowledge of server-side languages and runtimes like PHP, Python, or Node.js

Database management (MySQL, MongoDB)

Version control tools like Git

Excellent problem-solving and debugging abilities

Good sense of aesthetics and user interface design

Conclusion

Web development is the foundation of the modern digital experience. It connects people, businesses, and services on a global level. Because the web is always evolving, web development skills must evolve with it. By combining creativity with technical skill, developers can build not just sites but compelling, inclusive, and visionary digital experiences.

At Namrata Universal, we believe every business has the potential to take its ideas online with confidence. Whether you are starting from scratch or looking to enhance your current web presence, investing in professional web development is a step toward long-term success online.

Let your website be the window to your world—make it well-designed and wisely constructed.


Text

Cloud Database and DBaaS Market in the United States entering an era of unstoppable scalability

Cloud Database And DBaaS Market was valued at USD 17.51 billion in 2023 and is expected to reach USD 77.65 billion by 2032, growing at a CAGR of 18.07% from 2024-2032.
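The stated growth rate can be sanity-checked with the standard compound annual growth rate formula, (end / start)^(1 / years) - 1. The sketch assumes nine compounding years, from the 2023 base value through the 2032 forecast.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / periods) - 1."""
    return (end_value / start_value) ** (1 / periods) - 1


# USD billions: 2023 value to 2032 forecast, i.e. nine years of growth (2024-2032).
rate = cagr(17.51, 77.65, 2032 - 2023)
print(f"implied CAGR: {rate:.2%}")  # about 18.0%
```

The implied rate comes out at roughly 18.0%, close to the reported 18.07%. The small gap is consistent with rounding in the published figures.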

The Cloud Database and DBaaS Market is experiencing robust expansion as enterprises prioritize scalability, real-time access, and cost-efficiency in data management. Organizations across industries are shifting from traditional databases to cloud-native environments to streamline operations and enhance agility, creating substantial growth opportunities for vendors in the USA and beyond.

U.S. Market Sees High Demand for Scalable, Secure Cloud Database Solutions

The Cloud Database and DBaaS Market continues to evolve with increasing demand for managed services, driven by the proliferation of data-intensive applications, remote work trends, and the need for zero-downtime infrastructures. As digital transformation accelerates, businesses are choosing DBaaS platforms for seamless deployment, integrated security, and faster time to market.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/6586

Market Key Players:

Google LLC (Cloud SQL, BigQuery)

Nutanix (Era, Nutanix Database Service)

Oracle Corporation (Autonomous Database, Exadata Cloud Service)

IBM Corporation (Db2 on Cloud, Cloudant)

SAP SE (HANA Cloud, Data Intelligence)

Amazon Web Services, Inc. (RDS, Aurora)

Alibaba Cloud (ApsaraDB for RDS, ApsaraDB for MongoDB)

MongoDB, Inc. (Atlas, Enterprise Advanced)

Microsoft Corporation (Azure SQL Database, Cosmos DB)

Teradata (VantageCloud, ClearScape Analytics)

Ninox (Cloud Database, App Builder)

DataStax (Astra DB, Enterprise)

EnterpriseDB Corporation (Postgres Cloud Database, BigAnimal)

Rackspace Technology, Inc. (Managed Database Services, Cloud Databases for MySQL)

DigitalOcean, Inc. (Managed Databases, App Platform)

IDEMIA (IDway Cloud Services, Digital Identity Platform)

NEC Corporation (Cloud IaaS, the WISE Data Platform)

Thales Group (CipherTrust Cloud Key Manager, Data Protection on Demand)

Market Analysis

The Cloud Database and DBaaS Market is being shaped by rising enterprise adoption of hybrid and multi-cloud strategies, growing volumes of unstructured data, and the rising need for flexible storage models. The shift toward as-a-service platforms enables organizations to offload infrastructure management while maintaining high availability and disaster recovery capabilities.

Key players in the U.S. are focusing on vertical-specific offerings and tighter integrations with AI/ML tools to remain competitive. In parallel, European markets are adopting DBaaS solutions with a strong emphasis on data residency, GDPR compliance, and open-source compatibility.

Market Trends

Growing adoption of NoSQL and multi-model databases for unstructured data

Integration with AI and analytics platforms for enhanced decision-making

Surge in demand for Kubernetes-native databases and serverless DBaaS

Heightened focus on security, encryption, and data governance

Open-source DBaaS gaining traction for cost control and flexibility

Vendor competition intensifying with new pricing and performance models

Rise in usage across fintech, healthcare, and e-commerce verticals

Market Scope

The Cloud Database and DBaaS Market offers broad utility across organizations seeking flexibility, resilience, and performance in data infrastructure. From real-time applications to large-scale analytics, the scope of adoption is wide and growing.

Simplified provisioning and automated scaling

Cross-region replication and backup

High-availability architecture with minimal downtime

Customizable storage and compute configurations

Built-in compliance with regional data laws

Suitable for startups to large enterprises

Forecast Outlook

The market is poised for strong and sustained growth as enterprises increasingly value agility, automation, and intelligent data management. Continued investment in cloud-native applications and data-intensive use cases like AI, IoT, and real-time analytics will drive broader DBaaS adoption. Both U.S. and European markets are expected to lead in innovation, with enhanced support for multicloud deployments and industry-specific use cases pushing the market forward.

Access Complete Report: https://www.snsinsider.com/reports/cloud-database-and-dbaas-market-6586

Conclusion

The future of enterprise data lies in the cloud, and the Cloud Database and DBaaS Market is at the heart of this transformation. As organizations demand faster, smarter, and more secure ways to manage data, DBaaS is becoming a strategic enabler of digital success. With the convergence of scalability, automation, and compliance, the market promises exciting opportunities for providers and unmatched value for businesses navigating a data-driven world.

Related reports:

U.S.A leads the surge in advanced IoT Integration Market innovations across industries

U.S.A drives secure online authentication across the Certificate Authority Market

U.S.A drives innovation with rapid adoption of graph database technologies

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)



Text

Docker Tutorial for Beginners: Learn Docker Step by Step

What is Docker?

Docker is an open-source platform that enables developers to automate the deployment of applications inside lightweight, portable containers. These containers include everything the application needs to run—code, runtime, system tools, libraries, and settings—so that it can work reliably in any environment.

Before Docker, developers faced the age-old problem: “It works on my machine!” Docker solves this by providing a consistent runtime environment across development, testing, and production.

Why Learn Docker?

Docker is used by organizations of all sizes to simplify software delivery and improve scalability. As more companies shift to microservices, cloud computing, and DevOps practices, Docker has become a must-have skill. Learning Docker helps you:

Package applications quickly and consistently

Deploy apps across different environments with confidence

Reduce system conflicts and configuration issues

Improve collaboration between development and operations teams

Work more effectively with modern cloud platforms like AWS, Azure, and GCP

Who Is This Docker Tutorial For?

This Docker tutorial is designed for absolute beginners. Whether you're a developer, system administrator, QA engineer, or DevOps enthusiast, you’ll find step-by-step instructions to help you:

Understand the basics of Docker

Install Docker on your machine

Create and manage Docker containers

Build custom Docker images

Use Docker commands and best practices

No prior knowledge of containers is required, but basic familiarity with the command line and a programming language (like Python, Java, or Node.js) will be helpful.

What You Will Learn: Step-by-Step Breakdown

1. Introduction to Docker

We start with the fundamentals. You’ll learn:

What Docker is and why it’s useful

The difference between containers and virtual machines

Key Docker components: Docker Engine, Docker Hub, Dockerfile, Docker Compose

2. Installing Docker

Next, we guide you through installing Docker on:

Windows

macOS

Linux

You'll set up Docker Desktop (or Docker Engine and the Docker CLI on Linux) and run your first container using the hello-world image.

3. Working with Docker Images and Containers

You’ll explore:

How to pull images from Docker Hub

How to run containers using docker run

Inspecting containers with docker ps, docker inspect, and docker logs

Stopping and removing containers

4. Building Custom Docker Images

You’ll learn how to:

Write a Dockerfile

Use docker build to create a custom image

Add dependencies and environment variables

Optimize Docker images for performance

5. Docker Volumes and Networking

Understand how to:

Use volumes to persist data outside containers

Create custom networks for container communication

Link multiple containers (e.g., a Node.js app with a MongoDB container)
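As a preview of linking a Node.js app with a MongoDB container, here is a small Python helper that builds the corresponding `docker` commands and prints them as a dry run. The flags (`-d`, `--name`, `--network`, `-v`, `-e`, `-p`) are standard `docker run` options. The network name `app-net`, the volume `mongo-data`, the image `my-node-app:latest`, and the `MONGO_URL` variable are hypothetical placeholders; the variable name depends on how your app reads its configuration.

```python
import shlex


def docker_run_cmd(image, name, network=None, volumes=(), env=None, ports=(), detach=True):
    """Build an argument list for `docker run`."""
    cmd = ["docker", "run"]
    if detach:
        cmd.append("-d")                     # run in the background
    cmd += ["--name", name]                  # also the container's DNS name on the network
    if network:
        cmd += ["--network", network]        # attach to a user-defined network
    for source, target in volumes:
        cmd += ["-v", f"{source}:{target}"]  # named volume or host path -> container path
    for key, value in (env or {}).items():
        cmd += ["-e", f"{key}={value}"]
    for host_port, container_port in ports:
        cmd += ["-p", f"{host_port}:{container_port}"]
    cmd.append(image)
    return cmd


# Dry run: print the commands instead of executing them.
commands = [
    ["docker", "network", "create", "app-net"],
    docker_run_cmd("mongo:7", "db", network="app-net",
                   volumes=[("mongo-data", "/data/db")]),
    docker_run_cmd("my-node-app:latest", "web", network="app-net",
                   env={"MONGO_URL": "mongodb://db:27017/app"},
                   ports=[(3000, 3000)]),
]
for cmd in commands:
    print(shlex.join(cmd))
```

On a user-defined network, the app reaches the database by its container name (`db`). The named volume keeps MongoDB's data in `/data/db` across container restarts. To execute the commands against a running Docker daemon, pass each list to `subprocess.run(cmd, check=True)`.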

6. Docker Compose (Bonus Section)

Docker Compose lets you define multi-container applications. You’ll learn how to:

Write a docker-compose.yml file

Start multiple services with a single command

Manage application stacks easily

Real-World Examples Included

Throughout the tutorial, we use real-world examples to reinforce each concept. You’ll deploy a simple web application using Docker, connect it to a database, and scale services with Docker Compose.

Example Projects:

Dockerizing a static HTML website

Creating a REST API with Node.js and Express inside a container

Running a MySQL or MongoDB database container

Building a full-stack web app with Docker Compose

Best Practices and Tips

As you progress, you’ll also learn:

Naming conventions for containers and images

How to clean up unused images and containers

Tagging and pushing images to Docker Hub

Security basics when using Docker in production

What’s Next After This Tutorial?

After completing this Docker tutorial, you’ll be well-equipped to:

Use Docker in personal or professional projects

Learn Kubernetes and container orchestration

Apply Docker in CI/CD pipelines

Deploy containers to cloud platforms

Conclusion

Docker is an essential tool in the modern developer's toolbox. By learning Docker step by step in this beginner-friendly tutorial, you’ll gain the skills and confidence to build, deploy, and manage applications efficiently and consistently across different environments.

Whether you're building simple web apps or complex microservices, Docker provides the flexibility, speed, and scalability needed for success. So dive in, follow along with the hands-on examples, and start your journey to mastering containerization with Docker on tpoint-tech!


Text

Database hosting

WafaiCloud – Leading Cloud and Web Hosting Solutions in Saudi Arabia

In today’s fast-paced digital landscape, businesses across Saudi Arabia are moving rapidly towards cloud technologies to achieve scalability, security, and high performance. At the forefront of this shift is WafaiCloud, a trusted Saudi-based provider offering cutting-edge solutions including Cloud Servers, Managed Databases, VPS Hosting, and Web Hosting.

Whether you're a startup, a growing e-commerce brand, or a government agency, WafaiCloud delivers reliable and cost-effective infrastructure tailored to meet your needs—fully compliant with local regulations and high-performance standards.

Why Choose WafaiCloud?

WafaiCloud is more than a hosting provider—it's a strategic partner for digital growth. Here's why businesses across Saudi Arabia trust WafaiCloud:

1. Saudi-Based Infrastructure

All our services are hosted in local data centers within the Kingdom of Saudi Arabia. This ensures faster load times, lower latency, and compliance with Saudi data sovereignty laws, making WafaiCloud the ideal partner for businesses that demand performance and privacy.

2. 24/7 Arabic Support

Our bilingual support team is available round-the-clock to assist you in Arabic or English. We understand the unique challenges of the Saudi market and provide real-time local support, not just automated responses.

3. Customizable Solutions for Every Business

From small businesses to enterprise-level organizations, WafaiCloud offers scalable cloud services that grow with your needs. Choose from our flexible plans or request a custom infrastructure package.

Core Services We Offer

1. Cloud Servers in Saudi Arabia

Experience the power of a virtualized environment with WafaiCloud’s Cloud Servers. Our cloud infrastructure is built for speed, uptime, and data security.

Fully redundant, SSD-powered infrastructure

Scale vertically or horizontally on demand

Ideal for SaaS applications, e-commerce, and business-critical operations

API and dashboard access for full control

Our cloud servers are optimized for performance and support advanced configurations such as load balancing, backups, and firewall rules.

2. Managed Database Services

WafaiCloud simplifies database management so you can focus on development and innovation. Our Managed Database services support MySQL, PostgreSQL, and MongoDB.

Fully automated backups and high availability

Zero-downtime scaling

Monitoring, patching, and security updates included

Real-time performance metrics via dashboard

Whether you are building a fintech app or an enterprise CRM, we manage the backend to ensure 99.99% uptime.

3. VPS Hosting in Saudi Arabia

Our Virtual Private Servers (VPS) provide the power of dedicated hosting at a fraction of the cost. Perfect for developers, small businesses, and growing websites.

Root access with full customization

SSD storage and high-performance CPUs

Affordable monthly and annual plans

Ideal for hosting websites, game servers, or development environments

Hosted in local Saudi data centers, our VPS services ensure fast response times for your target audience.

4. Reliable Web Hosting

WafaiCloud’s Web Hosting solutions are ideal for bloggers, entrepreneurs, and small businesses launching their first website.

Easy-to-use cPanel access

Free SSL certificates

One-click WordPress installation

Daily backups and malware protection

Your website deserves to be online 24/7. With 99.9% guaranteed uptime and lightning-fast load speeds, we help you stay competitive in the Saudi digital space.

Built for Saudi Businesses

At WafaiCloud, we understand the needs of Saudi entrepreneurs, tech startups, e-commerce stores, and government clients. That’s why we offer:

Local billing in SAR (Saudi Riyals)

Compliance with CITC and local regulations

Cloud-native architecture optimized for Arabic content and right-to-left (RTL) websites

We make cloud adoption simple, affordable, and reliable—without compromising on quality.

SEO, Speed & Security: All in One Package

Search engine visibility begins with performance. Our hosting services are optimized for SEO and Core Web Vitals, including:

Fast server response time (TTFB)

Secure HTTPS encryption

100% mobile-friendly hosting

Integrated caching and CDN options

Google prioritizes fast, secure websites—so should you.

Getting Started with WafaiCloud

Starting your digital journey is easy with WafaiCloud. Our onboarding team will help you:

Migrate your website or database with zero downtime

Choose the right hosting package

Secure your site with SSL and DDoS protection

Monitor and scale resources from a centralized dashboard

Whether you’re launching a personal blog or a high-traffic portal, WafaiCloud ensures peace of mind with unmatched technical support.

Trusted by Developers & Businesses in Saudi Arabia

Our clients include leading tech startups, educational institutions, and growing e-commerce stores in Riyadh, Jeddah, Dammam, and beyond. They trust us because we offer:

Transparent pricing

No hidden fees

Flexible billing

Local experience and knowledge

Ready to Grow with WafaiCloud?

Make the switch to a faster, safer, and locally compliant cloud provider. WafaiCloud is here to help your business grow in the digital age with expert infrastructure, real-time support, and scalable technology.

Learn more about Database hosting
